I remember when I thought a gigabyte was a lot. I bought an external 1GB hard disk in 1995, and filled it up in no time at all. As geeky things go, it was pretty exciting.

Hadoop is designed for petabyte-scale data processing. Hadoop’s filesystem, HDFS, has a set of Linux-like commands for filesystem interaction. For example, this command lists the files in the HDFS directory shown:

hdfs dfs -ls /user/justin/dataset3/

Like Linux, other commands exist for finding information about file and filesystem usage. For example, the du command gives the amount of space used up by files in that directory:

hdfs dfs -du /user/justin/dataset3

1147227 part-m-00000

The df command shows the capacity and free space of the filesystem. The -h option displays the output in human-readable format, instead of a very long number:

hdfs dfs -df -h /

Filesystem Size Used Available Use%

hdfs://local:8020 206.8 G 245.6 M 205.3 G 0%

The hdfs command also supports other filesystems. You can use it to report on the local filesystem instead of HDFS, or on any other filesystem for which it has a suitable driver. Object storage systems such as Amazon S3 and OpenStack Swift are also supported, so you can do this:

hdfs dfs -ls file://var/www/html the local filesystem

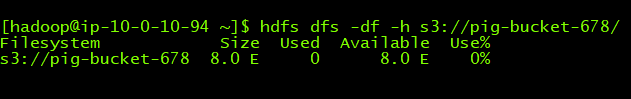

hdfs dfs -df -h s3://dataset4/ an Amazon s3 bucket called dataset4.Here is a screenshot showing the results of doing just that (from within an Amazon EMR cluster node).

It suggests that the available capacity of this S3 bucket is 8.0 exabytes. This is the first time I’ve ever seen an exa SI prefix for a disk capacity command. As geeky things go, it’s pretty exciting.

I assume this is just a reporting limit set in the S3 driver, and that the actual capacity of S3 is higher. AWS claim the capacity of S3 is unlimited (though each object is limited to 5 TB). AWS is constantly expanding, so it is safe to assume that AWS must be adding capacity to S3 all the time.
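One possible explanation, and this is my guess rather than anything the screenshot confirms, is that the driver simply reports the largest signed 64-bit number of bytes (2^63 - 1). That value happens to print as 8.0 in binary exabyte units (1024^6 bytes each), as this quick check shows. The helper name bytes_to_eb is made up for this example.

```shell
#!/bin/sh
# Assumption: the reported capacity is the largest signed 64-bit integer.
max_bytes=9223372036854775807   # 2^63 - 1

# Convert a byte count to binary exabytes (1024^6 bytes), one decimal place.
# bytes_to_eb is a hypothetical helper written for this example.
bytes_to_eb() {
    awk -v b="$1" 'BEGIN { printf "%.1f\n", b / (1024 ^ 6) }'
}

bytes_to_eb "$max_bytes"   # prints 8.0
```

If that guess is right, the 8.0 E figure says nothing about S3 itself; it is just the biggest number the capacity field can hold.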

The factor limiting how much data you can store in S3 will be your wallet. S3 storage is charged per GB per month. Prices vary by region, and start at 2.1c/GB per month (e.g. Virginia, Ohio or Ireland). For large-scale data, and for infrequent access, prices drop to around 1c/GB, assuming you don’t want to do anything with your massive data hoard.

Using “one-zone-IA” (1c/GB/month), it will cost US$86 MILLION a month to store 8 EB of data, plus support, plus tax. If you want to do anything useful with the data, a different storage class might be more appropriate, and you should also expect significant cost for processing.
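The arithmetic behind that figure can be sketched in a few lines of shell. This is a rough check only, assuming that 8 EB means 8 × 1024³ GB (binary units) and that the price is flat with no tiering. The function name monthly_cost_usd and its tenths-of-a-cent price argument are made up for this illustration.

```shell
#!/bin/sh
# Rough monthly storage cost in whole US dollars (fraction truncated).
# Assumptions: binary units (1 EB = 1024^3 GB) and a flat per-GB price.
# monthly_cost_usd is a hypothetical helper, not part of any AWS tool.
#   $1 = capacity in EB
#   $2 = price in tenths of a cent per GB per month (10 means 1c/GB)
monthly_cost_usd() {
    gb=$(( $1 * 1024 * 1024 * 1024 ))
    echo $(( gb * $2 / 1000 ))
}

monthly_cost_usd 8 10   # 1c/GB -> 85899345, i.e. roughly US$86 million
```

Passing 21 instead of 10 as the second argument gives the equivalent figure at the 2.1c/GB rate.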

Justin – February 2019